You might already be wondering what 'Diego' is. If so, this post should be useful, especially if you are new to Cloud Foundry. So what actually is 'Diego'? How is it related to Cloud Foundry? How is it different from the buildpack mechanism? Please be patient; you will soon find answers to all these questions. Let's proceed.

What is Diego

In a Cloud Foundry deployment without Diego, the Cloud Controller schedules and manages applications on the Droplet Execution Agent (DEA) nodes. Diego replaces the DEAs and the Health Manager, and takes over application scheduling and management from the Cloud Controller. Diego combines the scheduler, the runner and the health manager. The DEA, the Health Manager and Warden were rewritten in Go; 'DEA' plus 'Go' gives the name 'Diego'.

Diego is a rewrite of the Cloud Foundry runtime. Why was it written again? The existing code was hard to maintain and hard to extend with new features. The DEA and the Health Manager, which Diego removes the need for, were too enmeshed with the rest of the Cloud Foundry code base. So the runtime was written again in a different way, and the result is called 'Diego'.

At its core, Diego is a distributed system that orchestrates containerized workloads. I will describe each of these terms separately.

distributed system?

If you look into a running Diego installation, you will see a pile of VMs called 'cells'. They run containers with applications in them. There is also a handful of highly available VMs called the 'Brain'. Then there are a few machines running the BBS, which acts as a centralized data store used to coordinate information across the cluster. This is what makes Diego a distributed system.

orchestrates?

Diego's orchestration is responsible for two things.

1. Diego is a scheduler. When you bring your droplet to Diego, Diego tries to distribute it optimally across the running cells.

2. Meanwhile, Diego implements health monitoring. If your application crashes, Diego notices and restarts it. If an entire cell crashes, Diego notices and restarts those applications on other cells.
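
To make these two responsibilities concrete, here is a minimal Python sketch. It is not Diego's actual algorithm. The `Cell` class, the function names and the placement rule (fewest instances of the same app first, then most free memory) are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Cell:
    """A hypothetical cell VM with some memory left for containers."""
    name: str
    free_mb: int
    apps: list = field(default_factory=list)  # app names, one entry per instance


def place_instances(cells, app, instances, mb_per_instance):
    """Place instances one by one, spreading them across cells.

    Assumed rule: prefer the cell with the fewest instances of this app,
    and break ties by picking the cell with the most free memory.
    """
    placements = []
    for _ in range(instances):
        candidates = [c for c in cells if c.free_mb >= mb_per_instance]
        if not candidates:
            break  # no capacity left; the remaining instances stay unplaced
        best = min(candidates, key=lambda c: (c.apps.count(app), -c.free_mb))
        best.free_mb -= mb_per_instance
        best.apps.append(app)
        placements.append(best.name)
    return placements


def recover_from_cell_crash(cells, crashed_name, mb_per_instance):
    """Health monitoring, simplified: re-place everything a dead cell was running."""
    crashed = next(c for c in cells if c.name == crashed_name)
    survivors = [c for c in cells if c.name != crashed_name]
    moved = {}
    for app in set(crashed.apps):
        count = crashed.apps.count(app)
        moved[app] = place_instances(survivors, app, count, mb_per_instance)
    return moved
```

Calling `place_instances(cells, "web", 3, 256)` on three cells puts one instance on each cell, so losing a single cell takes out only one instance, which `recover_from_cell_crash` then moves to a surviving cell.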

containerized workloads?

A Task is a one-off unit of work that runs at most once inside a container. An LRP, or Long Running Process, is more complex: there can be N long-running instances distributed across cells for high availability. Tasks and LRPs together form a generic, platform-independent abstraction that describes what Diego can do. Diego takes a droplet, which is the product of running 'cf push', and runs the buildpack-based application. The same abstraction can be used for Docker-based applications on Diego.
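
The difference between the two abstractions can be sketched in a few lines of Python. This is only an illustration: the class names, fields and state names below are assumptions, not Diego's real data model.

```python
from dataclasses import dataclass


@dataclass
class Task:
    """A unit of work that runs at most once (staging is a typical example)."""
    guid: str
    state: str = "pending"

    def run(self, action):
        # Refuse a second run: a Task is never re-run in place.
        if self.state != "pending":
            raise RuntimeError(f"task {self.guid} has already run")
        self.state = "running"
        try:
            result = action()
        except Exception:
            self.state = "failed"
            raise
        self.state = "completed"
        return result


@dataclass
class LRP:
    """A long running process: `instances` copies that should always be up."""
    guid: str
    instances: int

    def instance_keys(self):
        # Each instance is identified by the LRP guid plus an index.
        return [(self.guid, index) for index in range(self.instances)]
```

A Task ends in a terminal state and is done, while an LRP only describes how many instances should exist; keeping them alive is the platform's job.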

Diego is responsible for the uptime of applications. It streams output from the application processes and ensures that requests are routed to those applications.

How Diego is related to Docker

Docker is a container engine that makes it easy to pack, ship and run software inside containers. Cloud Foundry has used containers to run applications for a long time. We might want to run multiple instances of the same app, and that is where containers help. Cloud Foundry uses its own container engine, 'Garden', to run these containers.

So why use Docker? Docker provides a convenient way of distributing containers. It defines something called an 'image', which we can push to 'Docker Hub' so that the whole community can make use of it.

So if Docker is that good at distributing containers, why not combine the two, Cloud Foundry and Docker? Diego takes this approach and combines the two technologies.

Diego Architecture

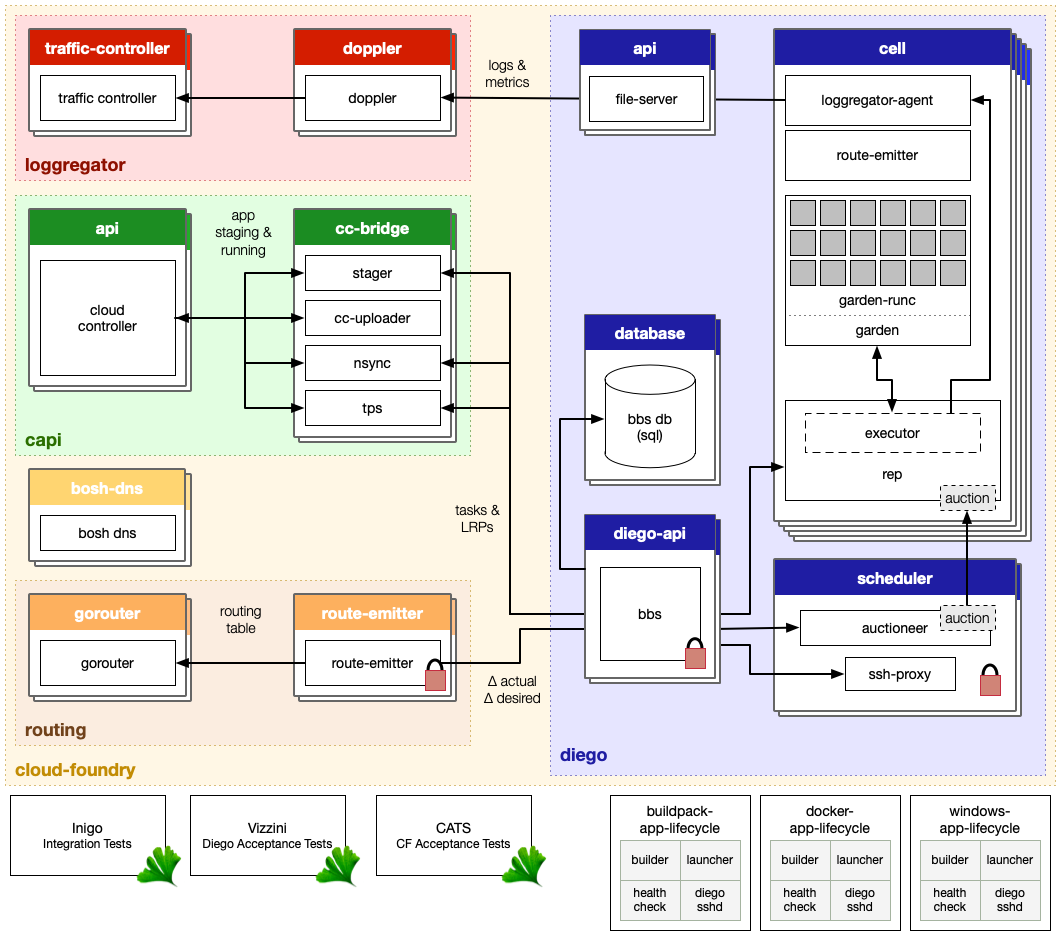

- The Cloud Controller passes requests to stage and run applications to the Cloud Controller Bridge (CC-Bridge).

- The CC-Bridge translates staging and running requests into Tasks and Long Running Processes (LRPs), then submits these to the Bulletin Board System (BBS) through an RPC-style API over HTTP. The BBS acts as a centralized data store used to coordinate information across the cluster.

- The BBS submits the Tasks and LRPs to the Auctioneer, which is part of the Diego Brain.

- The Auctioneer distributes these Tasks and LRPs to Cells through an Auction.

- Once the Auctioneer assigns a Task or LRP to a Cell, an in-process Executor creates a Garden container in the Cell. The Task or LRP runs in the container.

- The BBS tracks desired LRPs, running LRP instances and in-flight Tasks for the Converger, which is part of the Diego Brain. The Converger periodically analyzes this information and corrects discrepancies to ensure consistency between ActualLRP and DesiredLRP counts.

- Metron Agents, which are part of the Cells, forward application logs, errors, and metrics to the Cloud Foundry Loggregator.

- Loggregator, the Cloud Foundry component responsible for logging, provides a stream of log output from your application and from the Cloud Foundry system components that interact with your app during updates and execution. By default, Loggregator streams logs to your terminal. If you want to persist more than the limited amount of logging information that Loggregator can buffer, you can drain logs to a third-party log management service. Cloud Foundry gathers and stores logs in a best-effort manner.
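
The Converger's comparison of DesiredLRP and ActualLRP counts can be pictured with a small Python sketch. The `converge` function and its plain-dictionary inputs are hypothetical and only illustrate the idea; the real component works against the BBS.

```python
def converge(desired, actual):
    """Compare desired and actual instance counts and list corrective actions.

    Both arguments map an LRP guid to an instance count. The result is a list
    of ("start" | "stop", guid, how_many) tuples, ordered by guid.
    """
    actions = []
    for guid in sorted(set(desired) | set(actual)):
        want = desired.get(guid, 0)
        have = actual.get(guid, 0)
        if have < want:
            # Missing instances, for example after a crash: start the difference.
            actions.append(("start", guid, want - have))
        elif have > want:
            # Extra instances, or an LRP that is no longer desired: stop them.
            actions.append(("stop", guid, have - want))
    return actions
```

Running such a comparison periodically is what lets the system repair itself even if an individual event, such as a crash notification, is missed.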

1. Garden allows Diego to programmatically say three things: make me a container, put this in it, now go run this. This is done through a platform-agnostic API.

2. Garden allows Diego's abstractions to be flexible.
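
The three requests ("make me a container, put this in it, now go run this") map naturally onto three calls. The Python sketch below uses an in-memory stand-in; `FakeGarden` and its method names are hypothetical and are not the real Garden API.

```python
import itertools


class FakeGarden:
    """In-memory stand-in for a container backend (illustration only)."""

    def __init__(self):
        self._ids = itertools.count(1)
        self.containers = {}

    def create(self):
        """Make me a container: returns a handle for later calls."""
        handle = f"container-{next(self._ids)}"
        self.containers[handle] = {"files": {}, "processes": []}
        return handle

    def stream_in(self, handle, path, data):
        """Put this in it: copy bytes to a path inside the container."""
        self.containers[handle]["files"][path] = data

    def run(self, handle, command):
        """Now go run this: record the command and return a process id."""
        processes = self.containers[handle]["processes"]
        processes.append(command)
        return len(processes) - 1
```

Because the caller only sees these three operations, the backend behind them could differ per platform without the caller changing, which is the point of a platform-agnostic API.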

Each time you push an app, it has to be staged. A bridge component in the middle translates between the two domains. When staging an application, the Cloud Controller fires a 'stage app' request. This hits the Stager, which translates it into a Task that Diego runs. If the Task succeeds, the application can be run. The 'run app' request is received by Nsync, which translates it into an LRP (Long Running Process) that represents the application. The LRP is managed by Diego.
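
The translation step can be summarized in a short Python sketch. The request and result shapes here are invented for illustration; only the mapping (stage becomes a Task, run becomes an LRP) comes from the description above.

```python
def translate(request):
    """Map a Cloud Controller request onto Diego's vocabulary."""
    kind = request["type"]
    if kind == "stage":
        # The Stager's role: staging is one-off work, so it becomes a Task.
        return {"kind": "Task", "guid": "stage-" + request["app"]}
    if kind == "run":
        # Nsync's role: a running app is long-lived, so it becomes an LRP.
        return {
            "kind": "LRP",
            "guid": request["app"],
            "instances": request.get("instances", 1),
        }
    raise ValueError(f"unknown request type: {kind!r}")
```

For example, `translate({"type": "run", "app": "web", "instances": 2})` yields an LRP description with two instances, while a `"stage"` request for the same app yields a Task.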

Diego has matured enough that it can run your Docker images natively. The only thing you need is the Diego CLI, which makes pushing these images easy and is installed with the cf CLI. To run a Docker image using Diego's native support, run:

cf push <app-name> -o <publicly available image in Docker Hub>

Otherwise, you can deploy Diego to BOSH-Lite so that you can try deploying Docker images locally on your machine.

The key thing here is that Diego does not actually use the Docker daemon to run an image. Instead, it emulates Docker's behaviour using its own Garden tooling.

Problems

Diego goes to the internet, pulls all the bits of the Docker image and starts a container. The following problems came up with this approach initially.

1. Unpredictable scaling

2. Performance

3. Private images

1. Unpredictable scaling

Suppose you have started two instances of a publicly available image that you found in Docker Hub. These two instances have been running healthily for weeks and now you decide to scale up. So you open the console and type the following command.

cf scale <app-name> -i <number of instances>

This tells Diego 'please start this number of instances for me'. Diego then goes to the internet, pulls the latest version of the image and completes your request. If, in the meantime, the application provider has released a new version and pushed it to Docker Hub, the two original instances keep running the old version while the newly started instances run the new one. You end up with instances of both the old and the new version. This is called unpredictable scaling.
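
The effect is easy to reproduce in a toy Python model. `FakeRegistry` and `scale` are hypothetical names used only to illustrate the problem; nothing here talks to a real registry.

```python
class FakeRegistry:
    """A stand-in for a public registry where a tag can move to a new version."""

    def __init__(self):
        self.tags = {}

    def push(self, image, version):
        self.tags[image] = version

    def pull(self, image):
        return self.tags[image]


def scale(running, registry, image, target):
    """Start instances until `target` are running, pulling on every start.

    Each new instance runs whatever version the registry serves at that moment.
    """
    running = list(running)
    while len(running) < target:
        running.append(registry.pull(image))
    return running
```

If the provider pushes "v2" between the first start and a later `scale` call, the result is a mix such as `["v1", "v1", "v2", "v2"]`, which is exactly the situation described above.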

2. Performance

Another, more obvious problem is performance. This approach is suboptimal for each and every request. When scaling up the number of instances, Diego downloads the whole Docker image for each and every instance. Docker images tend to be large, so this is not efficient.

3. Private images

Diego lacks private image support. Private images are available in Docker Hub, but they are protected by credentials. So to support private images, you need to deal with credentials, and that is tricky: either you prompt the user for credentials every time, or you store the credentials in a database. This can be a problem if your organization decides to publish its proprietary app in Docker Hub.

Solution

To solve the aforementioned problems, the Cloud Foundry community came up with a 'Private Docker Registry'. It is a registry for Docker images, similar to Docker Hub, that runs only inside the private Diego network. It is not accessible to developers or users; no one can push images to or pull images from this registry directly. It exists solely to be accessed by Diego, which uses it as a cache. Let's see how this 'Private Docker Registry' solves the previously mentioned issues. Suppose you want to start up your Docker app with this caching support.

1. Unpredictable scaling

2. Performance

3. Private images
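
The caching idea can be sketched in Python as follows. This is an assumption about how such a cache could behave, not a description of the actual implementation: `Upstream` and `CachingRegistry` are hypothetical names, and cache eviction, refresh and credentials are left out.

```python
class Upstream:
    """Stand-in for a public registry; counts how often an image is downloaded."""

    def __init__(self):
        self.tags = {}
        self.downloads = 0

    def push(self, image, version):
        self.tags[image] = version

    def pull(self, image):
        self.downloads += 1
        return self.tags[image]


class CachingRegistry:
    """Stand-in for a private registry that is used purely as a cache."""

    def __init__(self, upstream):
        self.upstream = upstream
        self._cache = {}

    def pull(self, image):
        # Fetch from upstream only on the first request for an image;
        # afterwards every instance is started from the cached copy.
        if image not in self._cache:
            self._cache[image] = self.upstream.pull(image)
        return self._cache[image]
```

In this model, instances started later get the same version as the first ones even if a newer version has been pushed upstream, and the image is downloaded from the internet once instead of once per instance.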

That's how the private registry solves the aforementioned problems. I hope this post has been useful for understanding how Diego works in Cloud Foundry.
